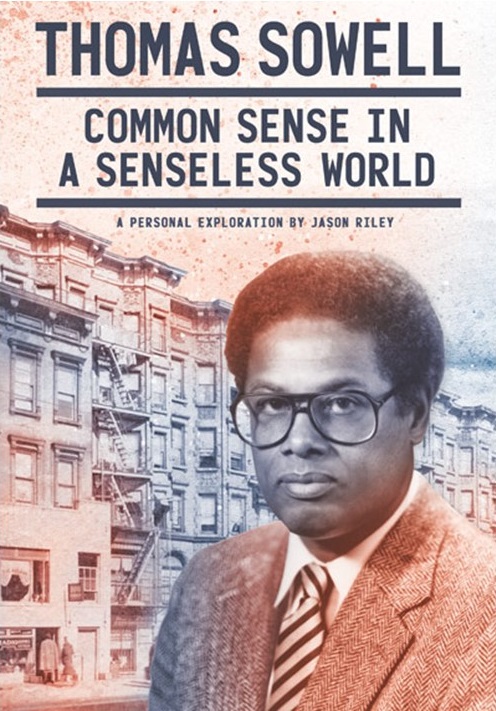

The new one-hour program “Thomas Sowell: Common Sense in a Senseless World” is a must-watch. Beautifully narrated by Jason Riley of the Wall Street Journal, it tells the amazing life story of Thomas Sowell, who was born in 1930 in North Carolina, was raised by a great-aunt after both his parents died, moved to Harlem at 8 years old, joined the Marines, went to Harvard for college and Chicago for a Ph.D., taught at Cornell and UCLA, finally settled at the Hoover Institution at Stanford University, and in the process became “one of the greatest minds,” as Riley puts it, “of the past half century.”

Free to Choose Network just announced that they are releasing this fascinating documentary “for airing on public television stations across the country – and for streaming on Amazon Prime, YouTube, and at freetochoosenetwork.org.” The public TV airings begin this month, but already the response on YouTube is huge with over 2 million views in just one week.

There is so much to like about this film. Visuals stream across the screen with a perfect selection of background music, as we see photo after photo, including a 1940 vintage rotary telephone which young Tommy wished he had. And Jason Riley takes us on location to 720 St Nicholas Ave in Harlem where Sowell lived, and to the front of the Harlem Branch of the New York Public Library, where a slightly older friend, Eddie Mapp, told him about library cards and books, and changed his life forever.

We watch teachers in the classroom helping little kids learn, illustrating Sowell’s long-term interest in the value of education. We hear friend Walter Williams reminisce about the days when he and Sowell were the only two Black conservatives, so people would say that they should not travel on a plane together. Larry Elder speaks on how Sowell causes “people to rethink their assumptions,” Steven Pinker on how Sowell will never compromise just to appear agreeable, Victor Davis Hanson on how “Tom is an empiricist,” and Peter Robinson on the many books Sowell published after turning 80. They all talk about what Thomas Sowell means to them and what he is like.

Tom’s special gift for explaining things and sharing with others is illustrated with rapper Eric July and his BackWordz style of music. We hear Dave Rubin ask Sowell in a TV interview about why he changed course away from Marxism early in life: “So what was your wake up to what was wrong with that line of thinking?” asks Rubin, and Sowell simply answers “Facts,” with maybe the best one-word answer in history. And that sets the stage for much of Tom Sowell’s unique empirical, eye-opening approach to research and writing, whether about economics, race, history, discrimination, education or culture. Throughout the program Jason Riley shows time after time that Sowell is one of those very rare people: “an honest intellectual.”

Throughout the show we see quotes on the screen from Sowell: “My great fear is that a black child growing up in Harlem today will not have as good a chance to rise as people in my generation did, simply because they will not receive as solid an education.” He talks of a turning point in the 1960s after which there was no longer a stigma from being on welfare, and urban schools went downhill. His latest book, Charter Schools and Their Enemies, recounts the story of the Success Academy in New York City and holds out hope for the future.

Tom’s long-held view is that there are no “solutions” to the difficult real-world problems we face as a society; there are only “tradeoffs” where you try to get as close as possible to the optimal answer. Here the film develops a clever analogy with Tom Sowell’s avid hobby, photography, where one is always changing and adjusting the aperture or the focus to improve the image, but never reaching an all-encompassing solution.

The film squeezes in meaningful interludes, such as his common-sense book about Late-Talking Children based on a very personal story, or the saga of Steinway pianos, where freedom to set up a new firm in America and try out new ideas was essential.

The film includes his time at the Hoover Institution, where instead of teaching thousands in classrooms he reaches millions with his books and his welcome emphasis on free markets and personal responsibility. He was drawn there in part by his teacher at Chicago, Milton Friedman.

Lucky for us, Jason Riley is about to publish a new book about Thomas Sowell. It is a biography called Maverick. I have had a chance to take a peek, and it is wonderful, a perfect book to read after the movie. Indeed, it is a must-read.