On Friday, May 6, we held our annual Monetary Policy Conference at the Hoover Institution at Stanford. This year it was entitled “How Monetary Policy Got Behind the Curve and How to Get Back,” and the title turned out to describe the theme of the conference, the papers presented, and the discussion from the audience remarkably well. Michael Bordo, John Cochrane and I organized the conference. Condoleezza Rice, Director of the Hoover Institution and former Secretary of State, gave motivating opening remarks, mentioning monetary policy rules and bringing in the complicating factors arising from the war in Ukraine. Monika Piazzesi, Stanford Professor of Economics, concluded with a great dinner talk called “Inflation Blues: The 40th Anniversary Reissue?”

The full agenda is available here: https://www.hoover.org/sites/default/files/agenda_may_6_2022-hoover_monetary_policy_conference_final.pdf We were pleased that so many knowledgeable speakers (listed below) joined us, along with many more experienced people in the audience, mostly in person at Hoover and some online. The audience included current policy makers, former policy makers, academics who focus on monetary and fiscal issues, people from the financial markets, and, very importantly, members of the media, both print and TV.

Presenters at “How Monetary Policy Got Behind the Curve and How to Get Back”

What Monetary Policy Rules and Strategies Say

Papers by Richard Clarida, Lawrence Summers, and John Taylor

Chair: Tom Stephenson

Fiscal Policy and Other Explanations

Papers by John Cochrane, Tyler Goodspeed, and Beth Hammack

Chair: Charles Plosser

The Fed’s Delayed Exits from Monetary Ease

Paper by Michael Bordo and Mickey Levy with discussion by Jennifer Burns

Chair: Kevin Warsh

Inflation Risks

Paper by Ricardo Reis with discussion by Volker Wieland

Chair: Arvind Krishnamurthy

Three World Wars: Fiscal-Monetary Consequences

Paper by George Hall and Thomas Sargent with discussion by Ellen McGrattan

Chair: John Lipsky

Toward a Monetary Policy Strategy

Papers by James Bullard, Randal Quarles, and Christopher Waller

Chair: Joshua Rauh

As with earlier Hoover monetary policy conferences, there will soon be a book outlining the timely and well-reasoned arguments of the conference papers and the discussion. But the excellent and extensive press coverage of the conference already gives a good sense of the overall themes. Here is a summary and a few quotes from the press.

News Articles

Jeanna Smialek published an article in the New York Times entitled “Fed Officials Are on the Defensive as High Inflation Lingers” and subtitled “Critics have accused the Federal Reserve of not reacting quickly enough to tame rising prices. On Friday, a Fed governor explained why it took so long.” The article notes that “Christopher Waller, a governor at the Federal Reserve, faced an uncomfortable task on Friday night: He delivered remarks at a conference packed with leading academic economists titled, suggestively, ‘How Monetary Policy Got Behind the Curve and How to Get Back.’” It added that “Friday’s event, at Stanford University’s Hoover Institute, was the clearest expression yet of the growing sense of skepticism around the Fed’s recent policy approach.” See https://www.nytimes.com/2022/05/06/business/economy/fed-inflation-waller.html

Nick Timiraos published an article in the Wall Street Journal entitled “Ex-Fed Official Says Rates of at Least 3.5% Will Be Needed to Slow Inflation” and subtitled “Richard Clarida expects central bank will be required to pursue increases ‘well into restrictive territory.’” As the article reported, “Even under a plausible best-case scenario in which most of the inflation overshoot last year and this year turns out to have been transitory, the funds rate will, I believe, ultimately need to be raised well into restrictive territory,” said Richard Clarida, referring to the federal-funds rate, in remarks prepared for delivery Friday at a conference at Stanford University’s Hoover Institution. See https://www.wsj.com/articles/ex-fed-official-says-rates-of-at-least-3-5-will-be-needed-to-slow-inflation-1165182940

Ann Saphir and Lindsay Dunsmuir of Reuters published an article entitled “Former Fed policymakers call for sharp U.S. rate hikes, warn of recession,” reporting that “Clarida, speaking Friday to a conference at Stanford University’s Hoover Institution, said the Fed will need to raise rates to ‘at least’ 3.5% if not higher to bring inflation back down to its 2% goal.” … “If inflation, now at 6.6% by the Fed’s yardstick, is a year from now still running at 3%, ‘simple and compelling’ arithmetic by a widely cited policy guide known as the ‘Taylor rule’ means rates will need to rise to 4%, he said. Clarida made the remarks at a conference convened by Stanford’s John Taylor, the author of that rule.” See https://www.reuters.com/world/us/former-fed-policymakers-call-sharp-us-rate-hikes-warn-recession-2022-05-06/
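To make that arithmetic concrete, here is a minimal sketch of the Taylor rule in Python, assuming the standard form i = r* + π + 0.5(π - 2) + 0.5y, where π is inflation, 2 is the inflation target, and y is the output gap. The neutral real rate r* = 0.5% and the zero output gap used below are illustrative assumptions chosen because they reproduce the 4% figure in the quote; they are not numbers reported in the article. (The original 1993 version of the rule used r* = 2%.)

```python
def taylor_rule(inflation, r_star=0.5, inflation_target=2.0, output_gap=0.0):
    """Policy rate (percent) implied by the Taylor rule.

    i = r_star + inflation + 0.5 * (inflation - target) + 0.5 * output_gap
    The r_star and output_gap defaults are illustrative assumptions.
    """
    inflation_gap = inflation - inflation_target
    return r_star + inflation + 0.5 * inflation_gap + 0.5 * output_gap


# Inflation still at 3% a year from now, assumed neutral real rate 0.5%, no output gap.
print(taylor_rule(3.0))              # 4.0
# Same inflation with the 2% neutral real rate of the 1993 version of the rule.
print(taylor_rule(3.0, r_star=2.0))  # 5.5
```

Because each percentage point of inflation enters once directly and again through the 0.5 weight on the inflation gap, the prescribed rate rises 1.5 points for every point of inflation, which is why even a 3% inflation rate calls for a funds rate well above 3%.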

Jonnelle Marte and Rich Miller wrote a Bloomberg piece entitled “Fed Officials Defend Policies, Say Forward Guidance Is Working,” reporting that Waller and Bullard say Fed guidance has had a substantial impact: “Two of the Federal Reserve’s most hawkish policy makers defended the central bank on Friday against charges that it had fallen well behind the curve in fighting inflation.” “Credible forward guidance means that market interest rates have increased significantly before tangible Fed action, Bullard said in remarks prepared for a conference hosted by the Hoover Institution at Stanford University. It provides another definition of ‘behind the curve,’ and the Fed is not far behind based on this definition.” … “A series of speakers at the conference, entitled ‘How Monetary Policy Got Behind the Curve and How to Get Back’, criticized the Fed for being late to react to rising inflation, which is now at a four-decade high.” See https://www.bloomberg.com/news/articles/2022-05-06/fed-s-waller-and-bullard-defend-policies-say-guidance-working

Greg Robb, in a MarketWatch article published at Barron’s, captured the theme of his piece with the straightforward title “Fed Hawks Say They’re Not So Behind the Curve Combatting Inflation.” See https://www.barrons.com/articles/federal-reserve-inflation-rate-hikes-51651947271?mod=hp_DAY_1

And Colin Lodewick of Fortune touched on similar themes in an article entitled “The Fed just increased interest rates by the most since 2000. A former official says it’s not nearly enough to slow inflation.” See https://fortune.com/2022/05/06/former-fed-official-richard-clarida-interest-rate-hikes-not-enough-slow-inflation/

Other Media at the Conference, including Television

There was also a lot of TV media set up on the patio outside the auditorium where the conference was held. This included a fascinating Bloomberg TV interview of Lawrence Summers and Niall Ferguson conducted by David Westin. Check it out on YouTube.

Another example was Michael McKee’s interview of me on “Bloomberg Markets: The Close,” with Caroline Hyde, Romaine Bostick and Taylor Riggs, also set up on the patio next to the conference at the Hoover Institution. Watch the video from 2:45 to 10:55: https://www.bloomberg.com/news/videos/2022-05-07/bloomberg-markets-the-close-5-06-2022

Actual Statements by Members of the Federal Open Market Committee

While the press coverage was excellent, it is very important to read the actual statements by the speakers, especially the current members of the Federal Open Market Committee who spoke at the conference. These are now available on the web pages of the Federal Reserve Board or the Federal Reserve Bank of St. Louis, and include:

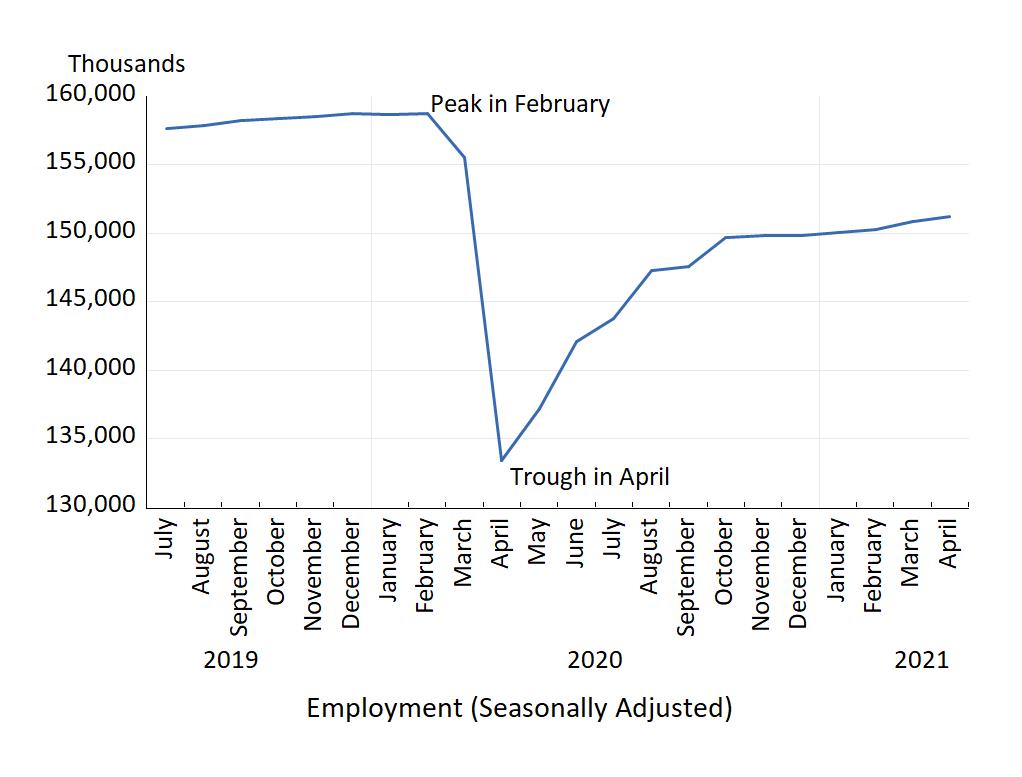

Christopher J. Waller, now a Governor of the Federal Reserve Board, who published “Reflections on Monetary Policy in 2021,” a written version of the talk he gave at the Hoover conference.

James Bullard, President and CEO of the Federal Reserve Bank of St. Louis, “Is the Fed ‘Behind the Curve’? Two Interpretations.” The Federal Reserve Bank of St. Louis press release about the talk is here: https://www.stlouisfed.org/news-releases/2022/05/06/bullard-discusses-is-the-fed-behind-the-curve-two-interpretations

These are all worth reading and watching, and the forthcoming book will be too.