This week, on January 4, 2023, John Cochrane, Mickey Levy, Kevin Warsh, and I spoke by Zoom at a policy roundtable of the Hoover Economic Policy Working Group on the increase in inflation and its possible causes. It was an important follow-up to a meeting we held a year ago on January 5, 2022. Each of us covered ground similar to last year: Cochrane talked about the fiscal side, Levy about inflation measures, Warsh about regime change, and I about the big deviations of policy from standard monetary policy rules. Many of us had written about these issues since the last group session in January 2022. The aim was to give different perspectives on a common theme: that the high inflation of the past year was brought about by an extra low policy interest rate, high money growth, or the big balance sheet expansion.

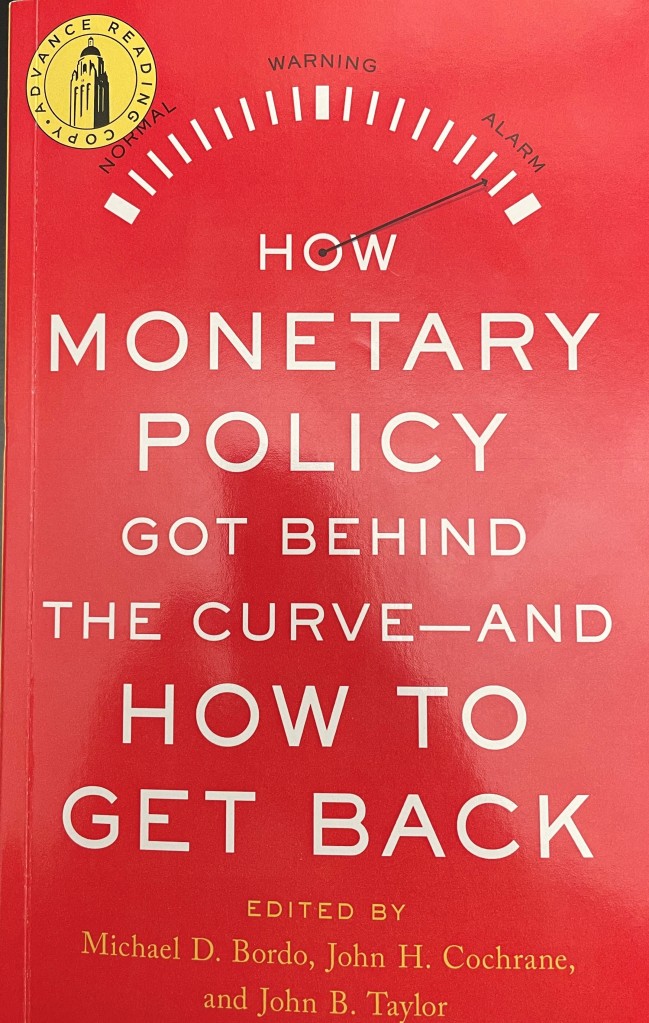

I talked about how the Fed got into this difficult situation, and I hope that brought back memories of the 1970s, when the critique was similar but also different in important ways. All agreed a year ago that the Fed was behind the curve, and the question was when and how rapidly to get back on track. This was also the title of the Hoover Institution book “How Monetary Policy Got Behind the Curve — and How to Get Back,” published in May 2022 and edited by Michael Bordo, John Cochrane, and John Taylor.

During the past year the Fed did indeed try to get back on track, increasing the federal funds rate from near zero to a bit over 4 percent. Some, including most of the speakers and participants, said that more was needed. The event again had many commentators and guests, including monetary experts such as Mervyn King, Andrew Levin, Bob Hall, and David Papell, who spoke on this theme from different perspectives.

The whole Zoom event of January 4, 2023 was video-recorded and can be found here, along with a list of participants and the slide presentations: https://www.hoover.org/events/policy-seminar-john-cochrane-mickey-levy-kevin-warsh-and-john-taylor It is fascinating to compare this year's session (Jan 2023) with last year's (Jan 2022), which had the same group of speakers and many of the same participants: https://www.hoover.org/events/roundtable-economic-policy-john-cochrane-mickey-levy-kevin-warsh-and-john-taylor In my view the comparison is most fascinating because the theme is similar, except that the Fed has begun to catch up. How will the economy, and indeed the world economy, look in January 2024? That depends on the Fed and other central banks.